Image Segmentation in 3LC

What is Image Segmentation?

Image segmentation is a fundamental computer vision task that divides images into meaningful parts by assigning labels to individual pixels. This enables machines to understand the content and structure of images at a detailed level. 3LC supports two main types of segmentation:

Semantic Segmentation: Assigns a class label to each pixel (e.g., all ‘car’ pixels get the same label), focusing on what objects are present in the image rather than telling individual objects apart

Instance Segmentation: Distinguishes between instances of the same class (e.g., each car gets a unique mask), enabling counting and the analysis of individual objects
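To make the difference concrete, a semantic mask stores one class index per pixel, while instance segmentation needs one mask per object. The sketch below is a minimal illustration, not 3LC code: it uses plain Python lists instead of NumPy image arrays, and the hypothetical helper `label_map_to_instances` splits the pixels of one class into instances with a 4-connected flood fill. Touching objects of the same class end up merged into a single instance, which is why instance masks cannot always be recovered from semantic masks.

```python
from collections import deque


def label_map_to_instances(label_map, class_id):
    """Split the pixels of one semantic class into binary instance masks.

    Each 4-connected region of `class_id` pixels becomes its own mask.
    """
    h, w = len(label_map), len(label_map[0])
    seen = [[False] * w for _ in range(h)]
    instances = []
    for y in range(h):
        for x in range(w):
            if label_map[y][x] != class_id or seen[y][x]:
                continue
            # Breadth-first flood fill starting from this unvisited seed pixel.
            mask = [[0] * w for _ in range(h)]
            queue = deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                mask[cy][cx] = 1
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and label_map[ny][nx] == class_id):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            instances.append(mask)
    return instances


# Semantic mask: 0 = background, 1 = "car". Two separate cars share label 1.
semantic = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
cars = label_map_to_instances(semantic, class_id=1)
print(len(cars))  # 2
```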

Common Data Formats

In real-world applications, segmentation data comes in various formats. 3LC supports most of the formats commonly used in industry, making it easy to work with existing datasets and annotations:

| Input Data Type | Task | 3LC Supported | Example |
|---|---|---|---|
| Single-channel masks | Semantic | ✓ | Grayscale PNG |
| Color-coded RGB/RGBA masks | Semantic | ✗ | RGB/RGBA PNG |
| Binary mask per instance | Instance | ✓ | NumPy arrays, bitmaps, ... |
| YOLO text files | Instance | ✓ | |
| COCO polygon lists | Instance | ✓ | |
| COCO RLE format | Instance | ✓ | |
| Custom RLE formats | Instance | ✓ | E.g. (run-offset, run-length) pairs |
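Color-coded RGB/RGBA masks are the one format marked as unsupported. Since single-channel masks are supported, one possible workaround is to convert color-coded masks into class-index masks before importing them. The following is a minimal sketch of that conversion; the palette, the `rgb_to_label_map` helper, and the use of 255 as an "ignore" index are all hypothetical choices, and a real pipeline would typically operate on NumPy arrays loaded from the PNG files.

```python
# Hypothetical palette mapping (R, G, B) colors to class indices.
# Replace it with the palette your dataset actually uses.
PALETTE = {
    (0, 0, 0): 0,    # background
    (128, 0, 0): 1,  # e.g. "car"
    (0, 128, 0): 2,  # e.g. "road"
}


def rgb_to_label_map(rgb_mask, palette, ignore_index=255):
    """Convert an H x W grid of RGB or RGBA pixels into an H x W grid of class indices.

    The alpha channel, if present, is dropped. Colors missing from the
    palette are mapped to `ignore_index` (a hypothetical convention).
    """
    return [
        [palette.get(tuple(pixel[:3]), ignore_index) for pixel in row]
        for row in rgb_mask
    ]


rgba_mask = [
    [(0, 0, 0, 255), (128, 0, 0, 255)],
    [(0, 128, 0, 255), (7, 7, 7, 255)],
]
print(rgb_to_label_map(rgba_mask, PALETTE))  # [[0, 1], [2, 255]]
```

The resulting class-index grid can then be saved as a grayscale PNG, the single-channel format listed as supported.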

Working with Segmentation in 3LC

The first step to working with segmentation data in 3LC is to create Tables. There are three ways to do this: manually, with one of the importer Table types, or automatically through one of our integrations.

3LC provides several tutorials covering various aspects of working with segmentation data. Whether you’re starting with raw masks, COCO annotations, or custom formats, there’s a tutorial to help you get started:

Tutorial |

Task |

Dataset |

Description |

|---|---|---|---|

Semantic |

ADE20K subset |

Manually creates a semantic segmentation table from a set of masks |

|

Semantic |

ADE20K subset |

Fine-tunes a SegFormer model using Pytorch |

|

Semantic |

Balloons |

Fine-tunes a SegFormer model using Pytorch Lightning |

|

Instance |

COCO128 |

Creates an instance segmentation table from a COCO annotations file |

|

Instance |

COCO128 |

Manually creates a instance segmentation table from polygon lists |

|

Instance |

COCO128 |

Manually creates a instance segmentation table from a set of masks |

|

Instance |

LIACI Underwater Ship Inspection |

Creates a instance segmentation table from multiple bitmap images |

|

Instance |

Sartorius Cell Segmentation |

Creates a instance segmentation table from a custom RLE format |

|

Instance |

ADE20K subset |

Converts a semantic segmentation table (PNG grayscale images) into a instance segmentation table |

|

Instance |

COCO128 |

Uses SAM to generate segmentation masks from images with bounding box annotations |

|

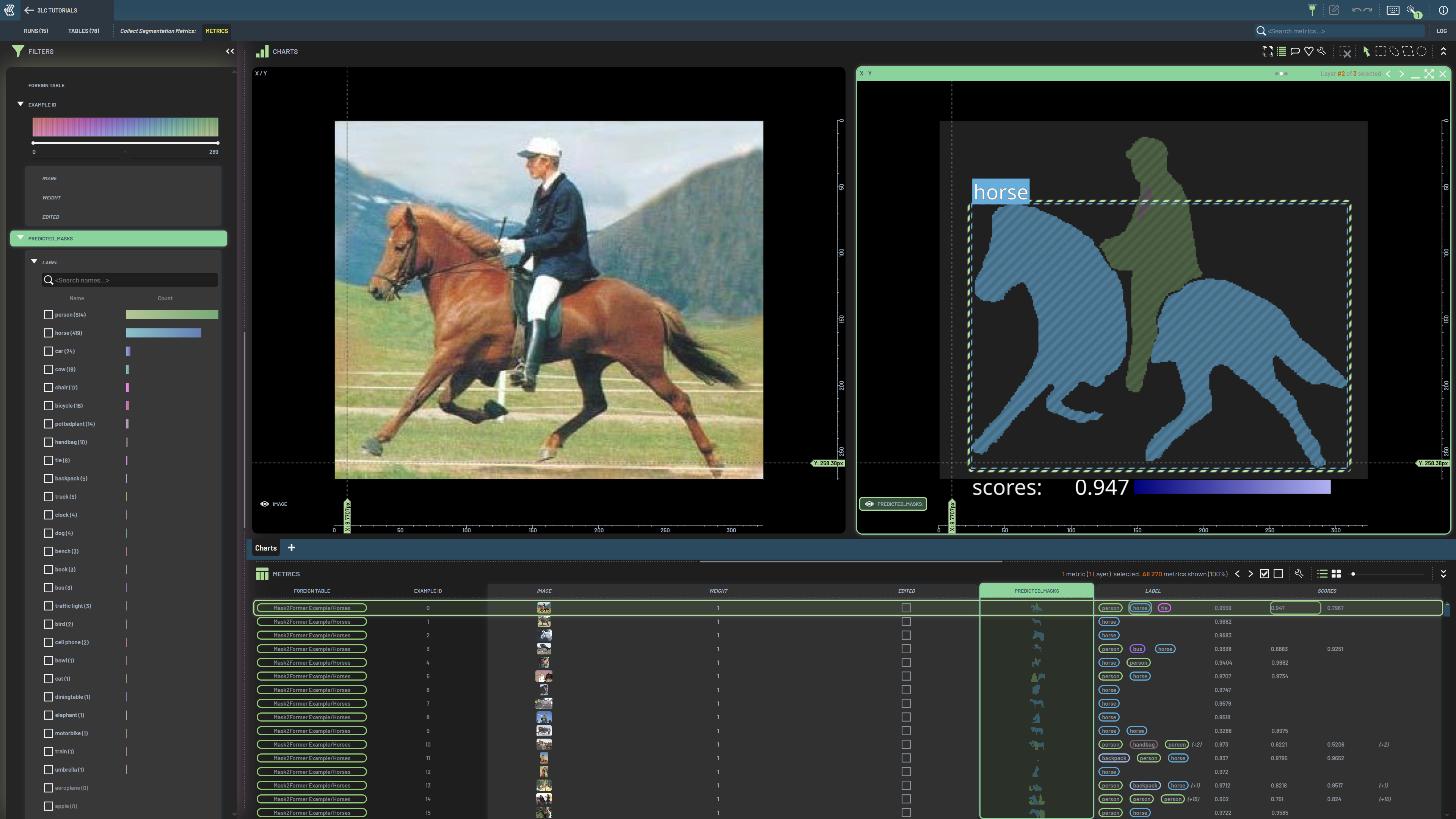

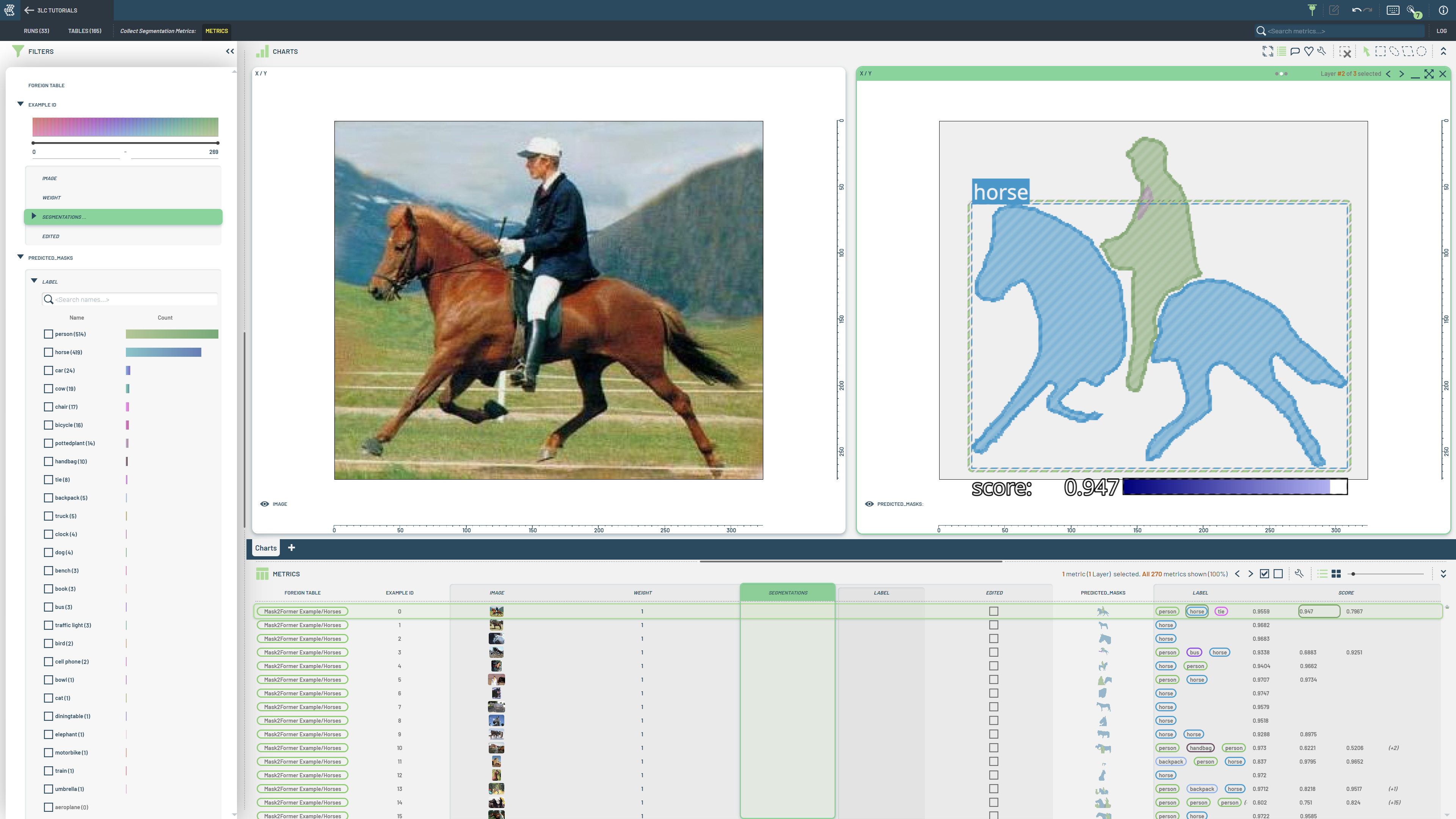

Instance |

COCO128 |

Collects instance segmentation masks from a pretrained Mask2Former model |
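Custom RLE formats, such as the one handled in the Sartorius Cell Segmentation tutorial, often store (run-offset, run-length) pairs, but the exact conventions differ between datasets. The sketch below decodes such pairs into a binary mask under two explicitly assumed conventions: offsets are 1-based, and pixels are numbered row by row. Check both against your dataset's documentation; `decode_offset_length_rle` is a hypothetical helper, not part of 3LC.

```python
def decode_offset_length_rle(rle, height, width, one_based=True):
    """Decode "offset length offset length ..." pairs into a binary mask.

    Assumptions (check them for your dataset): offsets are 1-based unless
    one_based=False, and pixels are numbered row by row (row-major order).
    """
    values = [int(v) for v in rle.split()]
    flat = [0] * (height * width)
    for offset, length in zip(values[0::2], values[1::2]):
        start = offset - 1 if one_based else offset
        if start < 0 or start + length > len(flat):
            raise ValueError(f"run ({offset}, {length}) falls outside the image")
        flat[start:start + length] = [1] * length
    # Cut the flat pixel list into `height` rows of `width` pixels.
    return [flat[r * width:(r + 1) * width] for r in range(height)]


mask = decode_offset_length_rle("2 2 7 3", height=3, width=4)
print(mask)  # [[0, 1, 1, 0], [0, 0, 1, 1], [1, 0, 0, 0]]
```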

Once your data is ready in 3LC, you can use it together with your favorite model and framework.

Working with Runs and Tables that contain segmentation data in the 3LC Dashboard is very similar to working with bounding box data, which is covered in depth in the Working with Bounding Boxes and Masks How-To guide.

Supported Models and Frameworks

3LC is designed to be model and framework agnostic, allowing you to use your preferred tools with minimal effort. We provide examples and integrations with popular frameworks including:

SAM (Segment Anything Model): State-of-the-art foundation model for segmentation

YOLO: Popular instance segmentation framework (https://github.com/3lc-ai/3lc-ultralytics)

Hugging Face Transformers: Access to various pre-trained models, datasets and trainers

Detectron2: Meta’s computer vision library
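YOLO segmentation labels (the "YOLO text files" format from the Common Data Formats table) store one object per line: a class index followed by the polygon's x, y coordinates, normalized to [0, 1] by the image width and height. The sketch below parses a single line into pixel coordinates. It is an illustrative, hypothetical helper, not how the 3LC Ultralytics integration reads labels internally.

```python
def parse_yolo_segment_line(line, img_width, img_height):
    """Parse one line of a YOLO segmentation label file.

    Expected format: "<class-index> x1 y1 x2 y2 ... xn yn", where the
    coordinates are normalized by the image width and height.
    Returns the class index and the polygon in pixel coordinates.
    """
    parts = line.split()
    class_index = int(parts[0])
    coords = [float(v) for v in parts[1:]]
    if len(coords) < 6 or len(coords) % 2 != 0:
        raise ValueError("expected an even number of coordinates forming at least 3 points")
    polygon = [
        (x * img_width, y * img_height)
        for x, y in zip(coords[0::2], coords[1::2])
    ]
    return class_index, polygon


cls, poly = parse_yolo_segment_line("0 0.25 0.5 0.75 0.5 0.75 1.0", img_width=200, img_height=100)
print(cls, poly)  # 0 [(50.0, 50.0), (150.0, 50.0), (150.0, 100.0)]
```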

Format Conversion Details

At its core, 3LC uses the COCO RLE (Run-Length Encoding) format as the internal format for storage and transfer. When data is requested in the sample-view, it can be converted to masks or polygons. Depending on the original data format, conversion might be required.
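COCO RLE comes in two variants: an uncompressed form whose `counts` is a list of run lengths, and a compressed string form, which is what pycocotools' `mask.encode` produces. The sketch below implements only the uncompressed variant in plain Python, to show the two conventions that are easy to get wrong: pixels are scanned in column-major order, and the counts always start with a run of 0s (which may have length 0). The helper names are hypothetical; in practice you would rely on pycocotools rather than this code.

```python
def encode_coco_rle(mask):
    """Encode a binary mask (list of rows) as uncompressed COCO RLE.

    Pixels are scanned column by column (column-major order), and the
    counts alternate between runs of 0s and runs of 1s, starting with 0s.
    """
    h, w = len(mask), len(mask[0])
    counts = []
    current, run = 0, 0
    for x in range(w):
        for y in range(h):
            value = 1 if mask[y][x] else 0
            if value != current:
                counts.append(run)  # close the current run (may be 0 at the start)
                current, run = value, 0
            run += 1
    counts.append(run)
    return {"size": [h, w], "counts": counts}


def decode_coco_rle(rle):
    """Decode uncompressed COCO RLE back into a binary mask (list of rows)."""
    h, w = rle["size"]
    flat = []
    value = 0
    for count in rle["counts"]:
        flat.extend([value] * count)
        value = 1 - value
    # `flat` is column-major: pixel (y, x) is stored at index x * h + y.
    return [[flat[x * h + y] for x in range(w)] for y in range(h)]


mask = [
    [0, 1, 1],
    [0, 1, 0],
]
rle = encode_coco_rle(mask)
print(rle)  # {'size': [2, 3], 'counts': [2, 3, 1]}
assert decode_coco_rle(rle) == mask  # the round trip reproduces the mask exactly
```

Because every pixel belongs to exactly one run, decoding reproduces the original mask bit for bit, which is why converting between masks and RLE loses no information.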

When working with segmentation data in 3LC, you should be aware of these key aspects:

Lossless Conversions: Conversion between masks and RLE format is completely lossless

Polygon Handling: Multi-polygon instances are automatically merged into single polygon paths

Edge Cases: Some information loss may occur when merging multi-polygon instances, but this rarely affects training outcomes, as the model will be trained on rasterized masks.

Implementation: We use proven implementations from pycocotools and OpenCV for format conversions

API: The SegmentationHelper class provides a set of helper functions for working with different segmentation formats.
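Converting polygons to masks, unlike converting masks to RLE, involves rasterization, and that is where information can be lost. The sketch below is a deliberately simplified rasterizer (even-odd rule, sampling each pixel at its center); it is not the algorithm used by pycocotools or OpenCV, whose edge handling differs, and `rasterize_polygon` is a hypothetical helper. Rasterizing a right triangle with 4-pixel legs yields a staircase of 6 pixels while the exact polygon area is 8, so the original outline cannot be recovered from the mask.

```python
def rasterize_polygon(polygon, height, width):
    """Rasterize a polygon into a binary mask using the even-odd rule.

    A pixel is set when its center (x + 0.5, y + 0.5) lies strictly inside
    the polygon. Simplified illustration only; real libraries differ at edges.
    """
    mask = [[0] * width for _ in range(height)]
    n = len(polygon)
    for y in range(height):
        cy = y + 0.5
        for x in range(width):
            cx = x + 0.5
            inside = False
            for i in range(n):
                x1, y1 = polygon[i]
                x2, y2 = polygon[(i + 1) % n]
                # Does a ray from the pixel center toward +x cross this edge?
                if (y1 > cy) != (y2 > cy):
                    x_cross = x1 + (cy - y1) * (x2 - x1) / (y2 - y1)
                    if cx < x_cross:
                        inside = not inside
            mask[y][x] = 1 if inside else 0
    return mask


triangle = [(0, 0), (4, 0), (0, 4)]
mask = rasterize_polygon(triangle, height=4, width=4)
for row in mask:
    print(row)
# [1, 1, 1, 0]
# [1, 1, 0, 0]
# [1, 0, 0, 0]
# [0, 0, 0, 0]
print(sum(map(sum, mask)))  # 6 pixels, versus an exact polygon area of 8
```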

The diagram below illustrates how the data is stored and converted:

Additional Resources

For deeper understanding and reference, we recommend these resources:

COCO Dataset: The standard benchmark for instance segmentation

pycocotools: Essential tools for working with COCO format

YOLO Segmentation: Real-time segmentation guide

Hugging Face Segmentation Guide: Working with transformer-based models