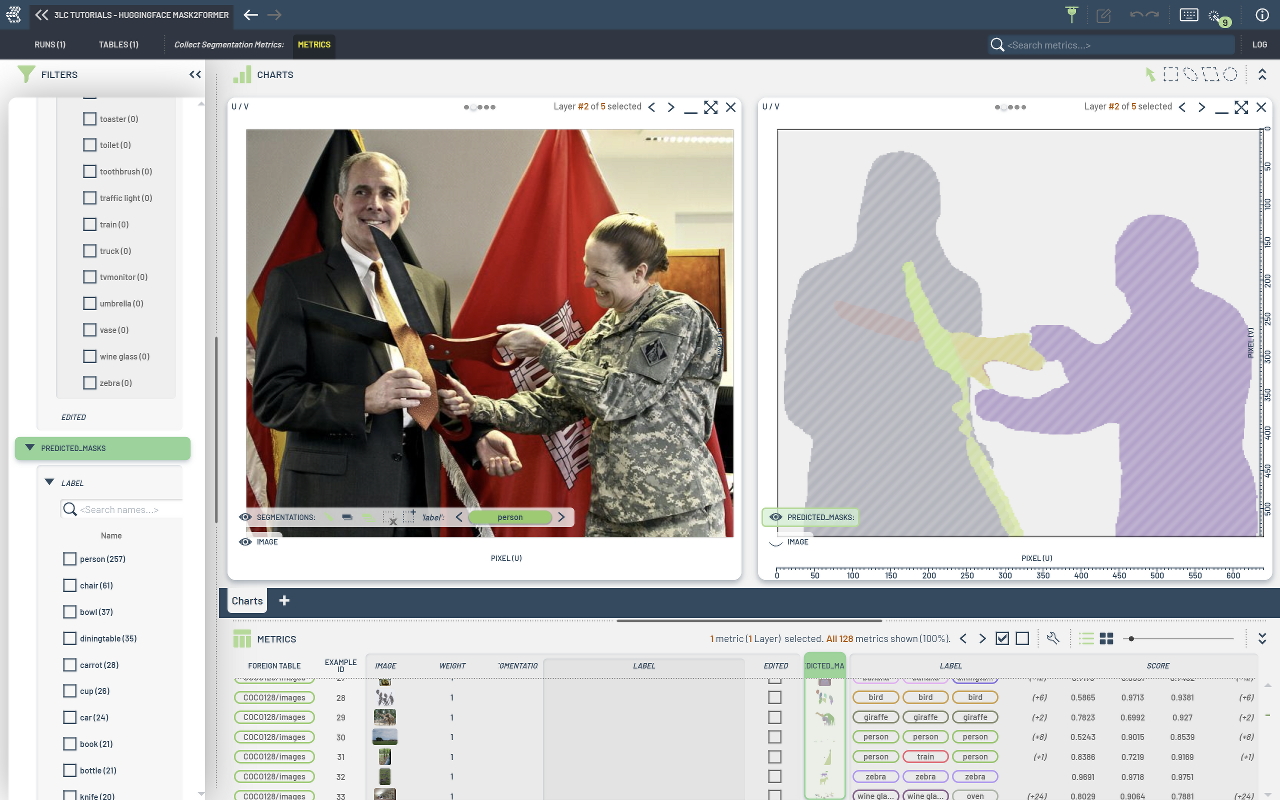

Collect Instance Segmentation Metrics from Pretrained Model

In this example, we will collect predicted instance segmentation masks from a pretrained model from the Hugging Face Hub.

The model we will use is Mask2Former, and the metrics will be collected using the `tlc.collect_metrics <https://docs.3lc.ai/3lc/latest/apidocs/tlc/tlc.client.torch.metrics.collect.html#tlc.client.torch.metrics.collect.collect_metrics>`__ function.

Metrics will be collected on a Table of images from the COCO128 dataset, but any image folder can be used.

Install dependencies

[ ]:

%pip install 3lc[huggingface]

%pip install git+https://github.com/3lc-ai/3lc-examples.git

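Imports

[ ]:

from pathlib import Path

import numpy as np
import tlc
import torch
import torchvision.transforms as T
from transformers import (
    AutoImageProcessor,
    Mask2FormerForUniversalSegmentation,
    Mask2FormerImageProcessor,
)

# Assumption: infer_torch_device comes from the tlc_tools package installed from 3lc-examples above
from tlc_tools.common import infer_torch_device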

Setup Project

[ ]:

PROJECT_NAME = "3LC Tutorials - HuggingFace Mask2Former"

DATASET_NAME = "COCO128"

TABLE_NAME = "images"

DATA_PATH = "../../data"

HF_MODEL_ID = "facebook/mask2former-swin-tiny-coco-instance"

Load the Model

Load a small Mask2Former model fine-tuned on COCO.

[ ]:

model = Mask2FormerForUniversalSegmentation.from_pretrained(HF_MODEL_ID)

device = infer_torch_device()

model.to(device)

[ ]:

image_processor: Mask2FormerImageProcessor = AutoImageProcessor.from_pretrained(
    HF_MODEL_ID,
    use_fast=False,
    do_rescale=False,
)

Create the Table

Create the Table to run inference on. Note we add an empty extra column for “segmentations”, which can be used as a target for accepting predictions when analyzing the Run in the 3LC Dashboard.

[ ]:

image_folder = Path(DATA_PATH) / "coco128" / "images"

assert image_folder.exists(), f"Image folder does not exist: {image_folder}"

[ ]:

# Create a value map from the model's label mapping

value_map = {k: tlc.MapElement(v) for k, v in model.config.id2label.items()}
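The comprehension above turns the model's `id2label` dictionary (class id to class name) into a dictionary of map elements keyed by the same ids. As a rough illustration, the sketch below does the same with a hypothetical three-class `id2label` and a stand-in `MapElement` dataclass, so that it runs without 3LC installed. The stand-in and its `name` field are assumptions for illustration, not the real `tlc.MapElement`.

```python
from dataclasses import dataclass


@dataclass
class MapElement:
    """Hypothetical stand-in for tlc.MapElement that only stores a class name."""

    name: str


# Hypothetical excerpt of a model's id2label mapping (class id -> class name)
id2label = {0: "person", 1: "bicycle", 2: "car"}

# Same shape as the notebook's value map: class id -> map element
value_map = {class_id: MapElement(name) for class_id, name in id2label.items()}

# A predicted class id can now be resolved to its class name
print(value_map[2].name)
```

Because the same value map is used for both the "segmentations" column and the predicted masks, a class id should mean the same thing in both.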

[ ]:

table = tlc.Table.from_image_folder(
    image_folder,
    include_label_column=False,
    table_name=TABLE_NAME,
    dataset_name=DATASET_NAME,
    project_name=PROJECT_NAME,
    extra_columns={
        "segmentations": tlc.InstanceSegmentationMasks(
            "segmentations",
            instance_properties_structure={
                "label": tlc.CategoricalLabel("label", value_map),
            },
        )
    },
)

Prepare the Table for inference by converting each image to a tensor and recording its original size, which is later used to resize the predicted masks back to the original image dimensions.

[ ]:

def table_map(sample):
    img_tensor = T.ToTensor()(sample.convert("RGB"))
    inputs = image_processor(images=img_tensor, return_tensors="pt")
    inputs["pixel_values"] = inputs["pixel_values"].squeeze(0)
    inputs["original_size"] = torch.tensor([sample.height, sample.width])
    return dict(inputs)

table.map(table_map)
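`T.ToTensor()` already scales 8-bit pixel values into the range [0, 1], which is presumably why the image processor was created with `do_rescale=False`. The sketch below uses plain arithmetic on a single pixel, assuming the usual 1/255 scaling factor, to show what a second rescale would do. It does not call torchvision or transformers, and the variable names are illustrative only.

```python
# Brightest possible 8-bit pixel value, as stored in the image file
raw_pixel = 255

# Step 1: ToTensor-style conversion scales the value into [0, 1]
after_to_tensor = raw_pixel / 255.0

# Step 2a: rescaling again (what do_rescale=True would do) shrinks it a second time
double_scaled = after_to_tensor / 255.0

# Step 2b: with do_rescale=False the already-scaled value is passed through unchanged
passed_through = after_to_tensor

print(f"scaled once:  {passed_through}")
print(f"scaled twice: {double_scaled:.5f}")
```

Scaled twice, a white pixel ends up close to zero, so the model would effectively see an almost black image.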

Define the Metrics Collector

Define the metrics collector function. This function will be called with a batch of images and the outputs from the model. It returns a dictionary of lists with the predicted masks in the 3LC segmentation format.

[ ]:

def collect_fn(batch, predictor_output):
    # Original (height, width) of each image in the batch
    original_sizes = [(int(h), int(w)) for h, w in batch["original_size"]]

    # Post-process the raw model output, resizing masks to the original image sizes
    results = image_processor.post_process_instance_segmentation(
        predictor_output.forward,
        target_sizes=original_sizes,
        return_binary_maps=True,
    )

    predicted_instances = []
    for result, (height, width) in zip(results, original_sizes):
        masks = result["segmentation"]
        labels = [i["label_id"] for i in result["segments_info"]]
        scores = [i["score"] for i in result["segments_info"]]

        if masks is not None:
            # Convert to a (height, width, num_instances) array
            masks = (
                np.expand_dims(masks.cpu().numpy(), axis=2)
                if len(masks.shape) == 2
                else masks.cpu().numpy().transpose(1, 2, 0)
            )
            masks = masks.astype(np.uint8)
        else:
            # No masks: empty array in the same (height, width, num_instances) layout
            masks = np.zeros((height, width, 0), dtype=np.uint8)

        instances = {
            "image_height": height,
            "image_width": width,
            "masks": masks,
            "instance_properties": {"label": labels, "score": scores},
        }
        predicted_instances.append(instances)

    return {"predicted_masks": predicted_instances}

metrics_collector = tlc.FunctionalMetricsCollector(
    collect_fn,
    column_schemas={
        "predicted_masks": tlc.InstanceSegmentationMasks(
            "predicted_masks",
            instance_properties_structure={
                "label": tlc.CategoricalLabel("label", value_map),
                "score": tlc.IoU("score"),
            },
            is_prediction=True,
        ),
    },
    compute_aggregates=False,
)
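The mask handling in `collect_fn` rearranges the predicted masks so that the instance axis comes last. The sketch below isolates that step with NumPy only, assuming, as the transpose in `collect_fn` implies, that stacked masks arrive as `(num_instances, height, width)` and that a single 2-D map should become one instance. The helper name `to_height_width_instances` is hypothetical and not part of 3LC or transformers.

```python
import numpy as np


def to_height_width_instances(masks: np.ndarray) -> np.ndarray:
    """Convert an (H, W) map or an (N, H, W) stack into an (H, W, N) uint8 array."""
    if masks.ndim == 2:
        # A single 2-D map becomes one instance: (H, W) -> (H, W, 1)
        masks = np.expand_dims(masks, axis=2)
    else:
        # Move the instance axis to the end: (N, H, W) -> (H, W, N)
        masks = masks.transpose(1, 2, 0)
    return masks.astype(np.uint8)


# Three instance masks for a 4x5 image, with one pixel set in the second mask
stacked = np.zeros((3, 4, 5), dtype=np.float32)
stacked[1, 2, 3] = 1.0

converted = to_height_width_instances(stacked)
print(converted.shape)  # (4, 5, 3)
```

After the conversion, the pixel at row 2, column 3 of instance 1 is found at `converted[2, 3, 1]`.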

Collect Metrics

Create a Run and collect the segmentation metrics from the model.

[ ]:

run = tlc.init(project_name=PROJECT_NAME, run_name="Collect Segmentation Metrics")

tlc.collect_metrics(
    table,
    metrics_collector,
    model,
    collect_aggregates=False,
    dataloader_args={"batch_size": 4},
)

run.set_status_completed()