Create Custom Oriented Bounding Box Table

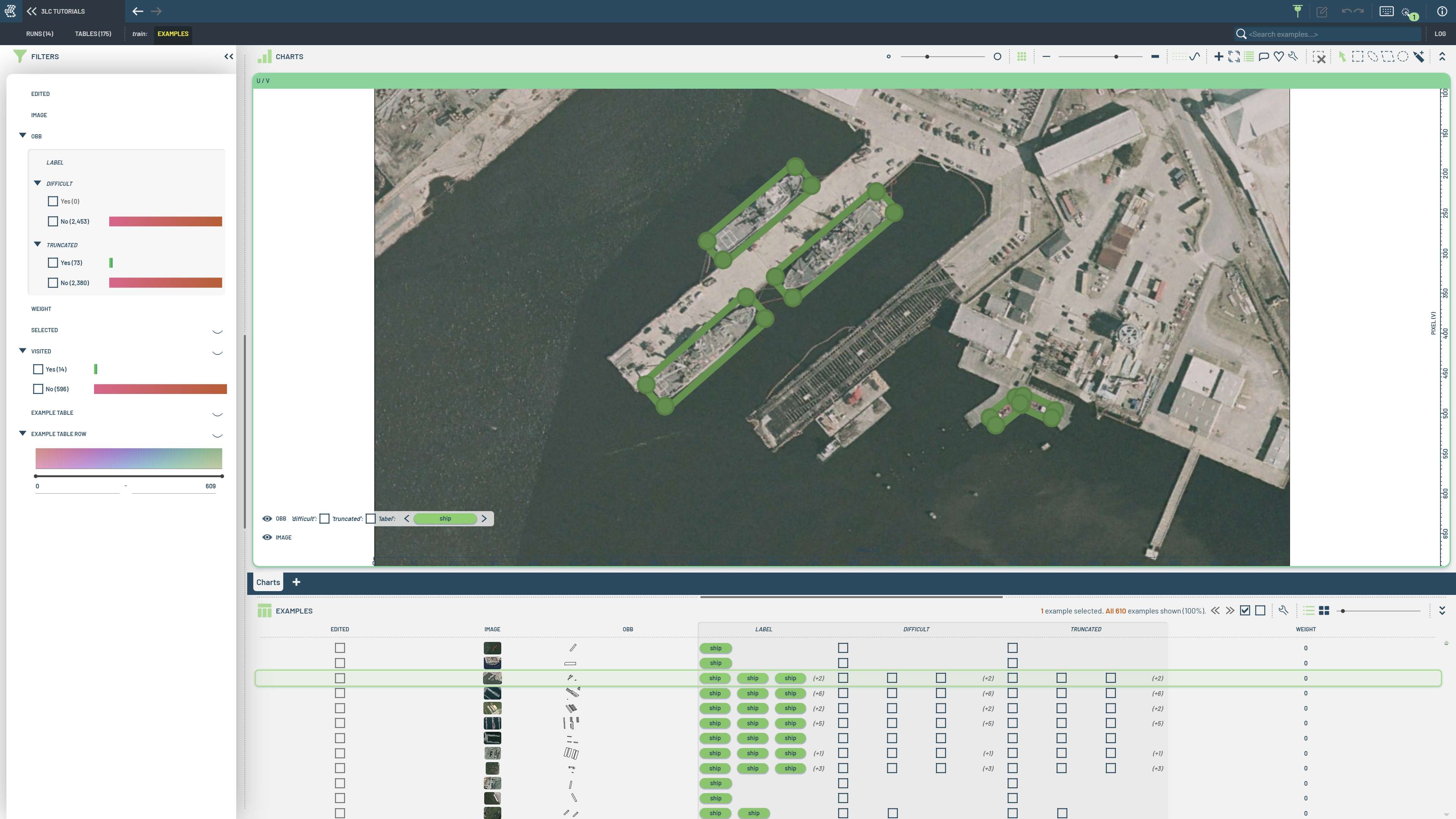

Create a 3LC Table with oriented bounding boxes using the HRSC2016-MS maritime ship detection dataset for rotated object detection.

Standard axis-aligned bounding boxes can’t capture rotated objects efficiently. Oriented bounding boxes provide precise localization for rotated objects like ships, text, and aerial vehicles, reducing background noise and improving detection accuracy.
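
To make the background-noise argument concrete, here is a minimal sketch that compares the area of an oriented box with the area of the axis-aligned box needed to enclose it. The helper names and the 200 x 40 pixel ship are hypothetical, and the angle is assumed to be in radians, measured about the box centre.

```python
import math


def obb_corners(cx, cy, w, h, angle):
    """Return the four corners of a w x h box centred at (cx, cy), rotated by `angle` radians."""
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    half_extents = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + dx * cos_a - dy * sin_a, cy + dx * sin_a + dy * cos_a) for dx, dy in half_extents]


def enclosing_aabb_area(corners):
    """Area of the smallest axis-aligned box that contains all corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


# Hypothetical ship: 200 x 40 pixels, rotated by 45 degrees
corners = obb_corners(cx=300.0, cy=300.0, w=200.0, h=40.0, angle=math.radians(45))
obb_area = 200.0 * 40.0
aabb_area = enclosing_aabb_area(corners)

print(f"OBB area:  {obb_area:.0f} px^2")
print(f"AABB area: {aabb_area:.0f} px^2")
print(f"Share of the AABB that is background: {1 - obb_area / aabb_area:.0%}")
```

For this elongated, diagonal box, most of the enclosing axis-aligned box is background, which is the inefficiency oriented boxes avoid.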

This notebook processes the HRSC2016-MS dataset containing 1070 images with 4406 annotated ships. We demonstrate creating custom oriented bounding box annotations with rotation angles, showing how to handle non-standard coordinate systems and rotation representations. The dataset comes from remote sensing research and provides challenging examples of rotated ship detection in optical satellite imagery, making it ideal for testing oriented detection algorithms.
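
Rotation representations differ between tools: the same rectangle can be written as `(w, h, angle)` or as `(h, w, angle + pi/2)`, and any angle is equivalent to itself plus `pi`. The sketch below normalizes a box to one possible "long edge" convention. The convention, the function name, and the use of radians are assumptions for illustration; the notebook does not state which convention the dataset or 3LC expects.

```python
import math


def to_long_edge(w, h, angle):
    """Rewrite (w, h, angle) so that w >= h and angle lies in [-pi/2, pi/2).

    Illustrative convention only; angles are assumed to be in radians.
    """
    if w < h:
        # Swapping the sides describes the same rectangle rotated by 90 degrees
        w, h = h, w
        angle += math.pi / 2
    # A rectangle maps onto itself under a rotation by pi, so wrap into a half-turn range
    angle = (angle + math.pi / 2) % math.pi - math.pi / 2
    return w, h, angle


# A box given in degrees is converted to radians first
print(to_long_edge(200.0, 40.0, math.radians(135)))
print(to_long_edge(40.0, 200.0, 0.0))
```

Both calls return a box with the long side first and an angle inside the half-turn range, so boxes from different sources can be compared directly.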

Project setup

[ ]:

PROJECT_NAME = "3LC Tutorials - OBBs"
DATASET_NAME = "HRSC2016-MS"
DOWNLOAD_PATH = "../../../transient_data"

Install dependencies

[ ]:

%pip install -q gdown
%pip install -q 3lc

[ ]:

import zipfile
from pathlib import Path

import gdown

DATASET_ROOT = Path(DOWNLOAD_PATH) / "HRSC2016-MS"

if not DATASET_ROOT.exists():
    # Make sure the download directory exists before writing the archive into it
    Path(DOWNLOAD_PATH).mkdir(parents=True, exist_ok=True)
    dst = Path(DOWNLOAD_PATH) / "hrsc2016-ms.zip"

    # Pass the output path as a string, which gdown accepts as a filename
    gdown.download("https://drive.google.com/uc?id=1UslulCCx8GoTflm1gpfIGZeXIsCAdMG5", str(dst), quiet=False)

    with zipfile.ZipFile(dst, "r") as zip_ref:
        zip_ref.extractall(DATASET_ROOT)

    # Remove the zipfile after extracting
    if dst.exists():
        dst.unlink()
else:
    print(f"Dataset already downloaded to {DATASET_ROOT}")

Imports

[ ]:

import xml.etree.ElementTree as ET
from collections import defaultdict
from pathlib import Path

import tlc

Prepare the data

The data can be downloaded as a 2.3 GB zip file from the dataset’s GitHub repository. When unzipped, it has the folder structure:

HRSC2016-MS/
├── Annotations/
├── AllImages/
└── ImageSets/
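
Before reading any files, it can help to confirm that the extracted archive matches this layout. The following is a minimal sketch using only `pathlib`; the helper name `missing_subdirs` is hypothetical, and the path is the one used in this notebook.

```python
from pathlib import Path

# Subdirectories listed in the folder structure above
EXPECTED_SUBDIRS = ("Annotations", "AllImages", "ImageSets")


def missing_subdirs(dataset_root):
    """Return the expected subdirectories that are absent under dataset_root."""
    root = Path(dataset_root)
    return [name for name in EXPECTED_SUBDIRS if not (root / name).is_dir()]


missing = missing_subdirs(Path("../../../transient_data") / "HRSC2016-MS")
if missing:
    print(f"Missing subdirectories: {missing}")
else:
    print("Dataset layout looks complete")
```

An empty result means all three folders are present. It does not verify the files inside them.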

[ ]:

DATASET_ROOT = Path(DOWNLOAD_PATH) / "HRSC2016-MS"

[ ]:

row_data = defaultdict(dict)

for split in ["train", "val"]:
    image_splits = Path(DATASET_ROOT) / "ImageSets" / f"{split}.txt"
    image_ids = image_splits.read_text().splitlines()
    row_data[split] = defaultdict(list)

    for image_id in image_ids:
        image_path = Path(DATASET_ROOT) / "AllImages" / f"{image_id}.bmp"
        annotation_path = Path(DATASET_ROOT) / "Annotations" / f"{image_id}.xml"

        # Skip IDs with a missing file so that only complete image/annotation pairs are kept
        if not image_path.exists():
            print(f"Image {image_id} does not exist")
            continue
        if not annotation_path.exists():
            print(f"Annotation {image_id} does not exist")
            continue

        row_data[split]["image"].append(tlc.Url(image_path).to_relative().to_str())
        # Path to the XML annotation, which load_obb_annotation (defined in the next cell) parses
        row_data[split]["obb"].append(annotation_path)

[ ]:

def load_obb_annotation(annotation_path):
    """Load annotations for a single image from XML format."""
    tree = ET.parse(annotation_path)
    root = tree.getroot()

    width = int(root.find("size").find("width").text)
    height = int(root.find("size").find("height").text)

    obbs = tlc.OBB2DInstances.create_empty(
        image_height=height, image_width=width, instance_extras_keys={"difficult", "truncated"}
    )

    for obj in root.findall("object"):
        difficult = int(obj.find("difficult").text)
        truncated = int(obj.find("truncated").text)

        # Each rotated box is stored as centre, size, and rotation angle
        bbox = obj.find("robndbox")
        cx, cy, w, h, angle = (float(bbox.find(tag).text) for tag in ["cx", "cy", "w", "h", "angle"])

        obbs.add_instance(
            obb=[cx, cy, w, h, angle],
            label=0,  # single-class dataset: all instances are ships
            instance_extras={
                "difficult": difficult,
                "truncated": truncated,
            },
        )

    return obbs.to_row()
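
To see the XML structure this function relies on without needing the dataset or 3LC, the sketch below parses a small annotation string into plain Python values. The sample XML and all numbers in it are made up for illustration and only mirror the tags read above (`size`, `object`, `difficult`, `truncated`, `robndbox`); `parse_boxes` is a hypothetical helper, not part of the notebook.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation with made-up values, shaped like the tags read above
SAMPLE_XML = """
<annotation>
  <size><width>1200</width><height>800</height></size>
  <object>
    <difficult>0</difficult>
    <truncated>1</truncated>
    <robndbox>
      <cx>600.5</cx><cy>400.0</cy><w>250.0</w><h>45.0</h><angle>0.785</angle>
    </robndbox>
  </object>
</annotation>
"""


def parse_boxes(xml_text):
    """Return ((width, height), boxes) with each box as a plain dict."""
    root = ET.fromstring(xml_text.strip())
    size = root.find("size")
    image_size = (int(size.find("width").text), int(size.find("height").text))

    boxes = []
    for obj in root.findall("object"):
        bbox = obj.find("robndbox")
        obb = tuple(float(bbox.find(tag).text) for tag in ("cx", "cy", "w", "h", "angle"))
        boxes.append(
            {
                "obb": obb,
                "difficult": bool(int(obj.find("difficult").text)),
                "truncated": bool(int(obj.find("truncated").text)),
            }
        )
    return image_size, boxes


image_size, boxes = parse_boxes(SAMPLE_XML)
print(image_size)
print(boxes)
```

Inspecting a few real annotation files this way is a quick check that every `object` element carries a `robndbox` before the full conversion runs.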

Create the tables

We use an OrientedBoundingBoxes2DSchema to describe the structure of the oriented bounding boxes, and Table.from_dict to create the Tables.

[ ]:

schemas = {
    "image": tlc.ImageUrlSchema(),
    "obb": tlc.OrientedBoundingBoxes2DSchema(
        classes=["ship"],
        per_instance_schemas={
            "difficult": tlc.BoolListSchema(),
            "truncated": tlc.BoolListSchema(),
        },
    ),
}
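
The tables are built from column-oriented data: a dict that maps each column name to a list with one entry per row. A small check that all columns have the same length can catch misaligned data before a table is written. This is a minimal sketch; `check_columns` is a hypothetical helper and the example values are placeholders.

```python
def check_columns(columns):
    """Return the shared row count of a column-oriented dict, or raise if the lengths differ."""
    lengths = {name: len(values) for name, values in columns.items()}
    if len(set(lengths.values())) > 1:
        raise ValueError(f"Columns have different lengths: {lengths}")
    return next(iter(lengths.values()), 0)


# Hypothetical two-row example with placeholder values
example = {"image": ["a.bmp", "b.bmp"], "obb": ["a.xml", "b.xml"]}
print(f"{check_columns(example)} rows")
```

In this notebook the same check could be applied to `row_data[split]` for each split.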

[ ]:

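for split in ["train", "val"]:
    # Parse each stored XML annotation path into the row format described by the OBB schema
    data = {
        "image": row_data[split]["image"],
        "obb": [load_obb_annotation(path) for path in row_data[split]["obb"]],
    }

    table = tlc.Table.from_dict(
        data=data,
        structure=schemas,
        table_name=split,
        dataset_name=DATASET_NAME,
        project_name=PROJECT_NAME,
        if_exists="rename",
    )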

[ ]:

table