Bulk Data

Bulk data refers to data that is too large to be included in the serialized version of a 3LC Table. Instead, the data is stored in separate files on disk, and only references to those files are stored in the Table, as URLs. When a Table is viewed in the 3LC Dashboard, only the data that is actually needed is requested from the Object Service. This leads to faster loading times and lower memory usage.
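The idea of "references in the Table, payloads on disk" can be illustrated with a minimal, library-independent sketch. This is not 3LC's implementation. The `LazyTable` class and the file names are hypothetical, chosen only to show that the table holds small references while each payload is read from disk only when its row is requested.

```python
import tempfile
from pathlib import Path


class LazyTable:
    """Hypothetical table that keeps only references to bulk data files (not 3LC's API)."""

    def __init__(self, refs):
        self.refs = list(refs)  # the small, serializable part of the table
        self.reads = 0          # how many payloads have been loaded so far

    def __getitem__(self, index):
        # Only the requested row's payload is read from disk.
        self.reads += 1
        return Path(self.refs[index]).read_bytes()


with tempfile.TemporaryDirectory() as tmp:
    refs = []
    for i in range(3):
        # Each (potentially large) payload goes to its own file on disk.
        path = Path(tmp) / f"sample_{i}.bin"
        path.write_bytes(bytes([i]) * 1024)
        refs.append(str(path))

    lazy_table = LazyTable(refs)
    first = lazy_table[0]  # reads one file; the other two are never loaded
    print(len(first), lazy_table.reads)  # 1024 1
```

In 3LC, the role of `__getitem__` here is played by the Object Service, which serves bulk data to the Dashboard on demand.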

Example

The most common bulk data in 3LC is images. The following simple example shows how to create a Table with a single column of images named "image".

import tlc

table = tlc.Table.from_dict(
    data={"image": ["path/to/image0.png", ...]},
    structure=tlc.Schema(values={"image": tlc.ImageUrlSchema()}),
)

table[0]
# {"image": "path/to/image0.png"}

The Schema declaration is what lets 3LC know that the image path strings represent bulk data. When the Table is opened in the Dashboard, the image data can therefore be requested and visualized on the fly.

SampleType

When the Schema of a Table is defined using SampleTypes, a SampleType may represent the conversion to and from anonymous (and possibly large) Python objects in the Sample view. In these cases, 3LC sets aside the data on disk in a project-level bulk data directory. It then stores only a reference in the Table, as a relative path inside the project. The following example shows this behavior for the tlc.PILImage SampleType.

import numpy as np
from PIL import Image

import tlc

# Create a list of 5 random RGB PIL Images (100x100)
samples = [Image.fromarray((np.random.rand(100, 100, 3) * 255).astype("uint8")) for _ in range(5)]

table = tlc.Table.from_torch_dataset(
    dataset=samples,
    structure=tlc.PILImage(name="image"),
    project_name="SampleType Bulk Data Demo",
)

# Access the Sample View of the first row
table[0]
# <PIL.PngImagePlugin.PngImageFile image mode=RGB size=100x100 at 0x31B775590>

# Access the Row View of the first row
table.table_rows[0]
# ImmutableDict({'image': '../../bulk_data/samples/table/image/0000000.png', 'weight': 1.0})
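The Row View holds a relative path rather than an absolute one. As a result, the reference stays valid as long as the directory structure around it is preserved. The sketch below illustrates this with plain path arithmetic.

It assumes, purely for illustration, that the reference is resolved against a hypothetical directory two levels below the project root. The paths `/data/my_project/...` and `/mnt/backup/my_project/...` are made up, and the base directory 3LC actually resolves against is not documented here.

```python
import posixpath


def resolve(base_dir, relative_ref):
    """Join a relative bulk data reference onto a base directory and normalize it."""
    return posixpath.normpath(posixpath.join(base_dir, relative_ref))


# Relative reference as shown in the Row View above.
ref = "../../bulk_data/samples/table/image/0000000.png"

# Hypothetical base directories: the original project and a copy of it elsewhere.
original = resolve("/data/my_project/datasets/samples", ref)
copied = resolve("/mnt/backup/my_project/datasets/samples", ref)

print(original)  # /data/my_project/bulk_data/samples/table/image/0000000.png
print(copied)    # /mnt/backup/my_project/bulk_data/samples/table/image/0000000.png
```

Both results land in the same project-level `bulk_data` directory relative to their own project root, which is what keeps the reference usable after the project is moved.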

The same pattern of setting aside bulk data applies to several other SampleTypes, such as tlc.NumpyArray and tlc.TorchTensor. For a complete overview, see tlc.client.sample_type.

Geometry

Experimental Feature

Geometry bulk data is an experimental feature. APIs and usage patterns may change in the future.

Example

The following example shows how to store a single point cloud in a 3LC Table using geometry bulk data.

import numpy as np

import tlc
from tlc.core.helpers.bulk_data_helper import BulkDataAccessor

# Define the bounds of the point cloud
bounds = (-100, -100, -100, 100, 100, 100)

# Create a point cloud of 1000 random 3D points
points = np.random.rand(1000, 3).astype(np.float32)

# Define a schema for the column containing the point cloud
schema = tlc.Geometry3DSchema(
    include_3d_vertices=True,
    is_bulk_data=True,
)

# Create a Geometry3DInstances object for the point cloud
instances = tlc.Geometry3DInstances.create_empty(*bounds)
instances.add_instance(points)

# Create a TableWriter for writing the point cloud
writer = tlc.TableWriter(
    table_name="point_cloud",
    project_name="point_cloud_project",
    column_schemas={"points": schema},
)

# Write the point cloud to disk
writer.add_row({"points": instances.to_row()})
table = writer.finalize()

# The location of the bulk data is given by the Table's bulk_data_url property.
print(table.bulk_data_url)
# Url('relative://../../bulk_data/samples/point_cloud')

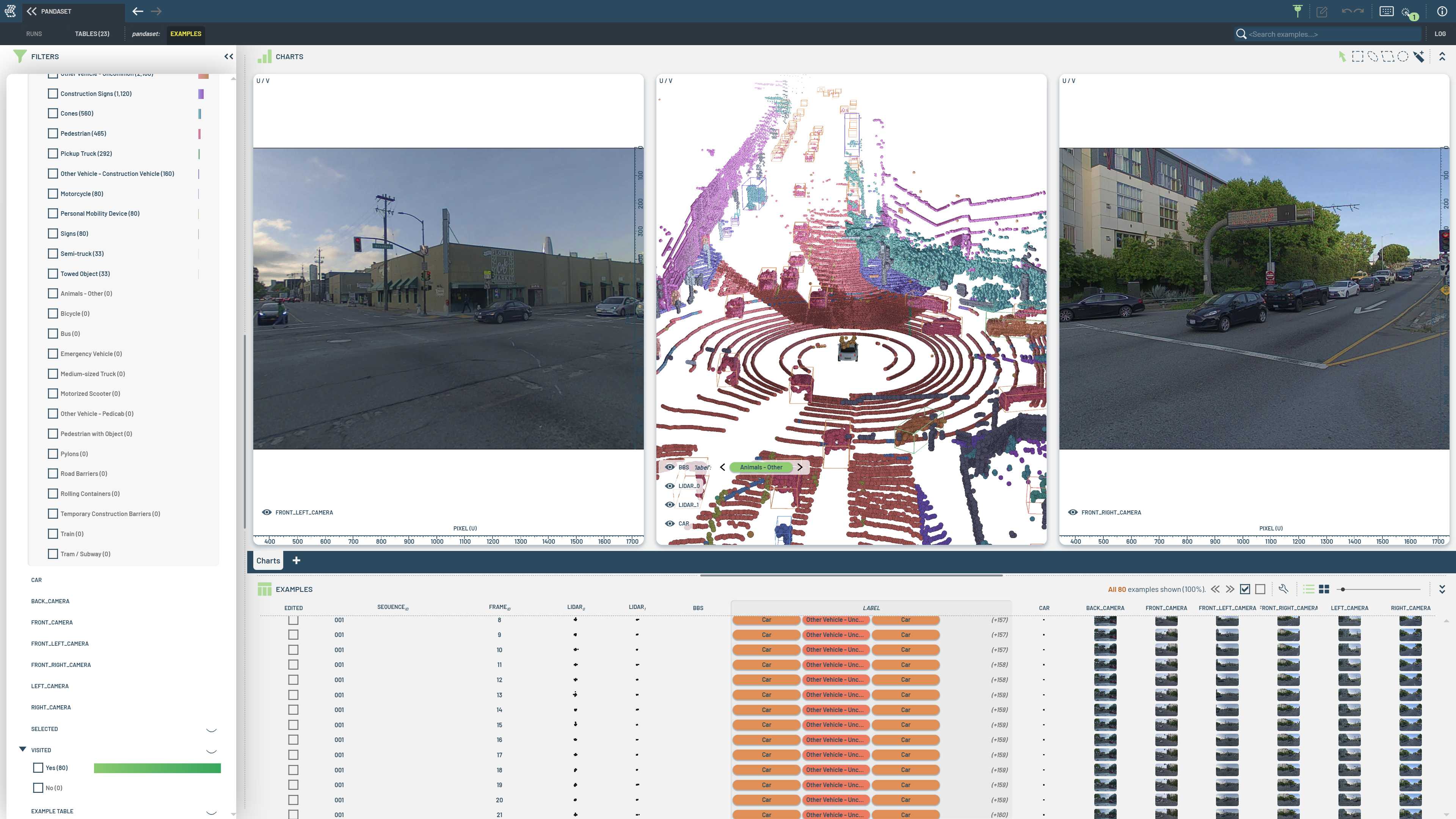

# The Table can now be loaded and visualized in the 3LC Dashboard.

# To access the point cloud data in Python, use a BulkDataAccessor.
accessor = BulkDataAccessor(table)
row = accessor[0]
points_reloaded = tlc.Geometry3DInstances.from_row(row["points"])

# The data read back is now a flattened array of floats.
np.testing.assert_array_equal(points.reshape(-1), points_reloaded.vertices[0])
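In a flattened vertex buffer, the coordinates of consecutive points are stored back to back. The sketch below assumes an x, y, z ordering per point, which is consistent with the `points.reshape(-1)` comparison in the example above. It regroups such a buffer into per-point tuples in plain Python. The helper `to_points` is hypothetical and not part of the 3LC API. With NumPy, `np.asarray(points_reloaded.vertices[0]).reshape(-1, 3)` should give the same grouping as an array.

```python
def to_points(flat, dims=3):
    """Regroup a flattened [x0, y0, z0, x1, y1, z1, ...] buffer into per-point tuples."""
    if len(flat) % dims:
        raise ValueError(f"length {len(flat)} is not a multiple of {dims}")
    return [tuple(flat[i:i + dims]) for i in range(0, len(flat), dims)]


flat = [0.1, 0.2, 0.3, 1.1, 1.2, 1.3]  # two points, flattened
print(to_points(flat))  # [(0.1, 0.2, 0.3), (1.1, 1.2, 1.3)]
```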

Limitations

Geometry bulk data is currently limited to vertices, lines, and triangles. When such a bulk data Table is created, the data is cached on disk in a binary format. Writing bulk data Tables directly to object storage is not supported. However, since the Table refers to its bulk data through a relative URL (as the bulk_data_url output in the example shows), a bulk data Table can be copied as-is to a remote location. It will then be accessible from the new location; the only additional configuration needed is to have the Object Service scan that location.
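The binary cache format is not specified here. As a rough illustration only, the sketch below assumes a flat sequence of little-endian float32 values. This is a hypothetical layout, not necessarily the one 3LC uses. The sketch shows that such a cache costs 4 bytes per coordinate and round-trips exactly for values that are representable in float32.

```python
import struct
import tempfile
from pathlib import Path


def write_vertices(path, vertices):
    """Write a flat list of floats as little-endian float32 (hypothetical format)."""
    path.write_bytes(struct.pack(f"<{len(vertices)}f", *vertices))


def read_vertices(path):
    """Read the flat float32 buffer back into a list of Python floats."""
    data = path.read_bytes()
    return list(struct.unpack(f"<{len(data) // 4}f", data))


vertices = [0.0, 0.5, 1.0, -2.0, 3.25, 4.0]  # two points, flattened

with tempfile.TemporaryDirectory() as tmp:
    cache = Path(tmp) / "vertices.bin"
    write_vertices(cache, vertices)
    size = cache.stat().st_size
    restored = read_vertices(cache)

print(size, restored == vertices)  # 24 True
```

Values that are not exactly representable in float32 (for example 0.1) would come back slightly changed, which is one reason to compare float32 data against float32 inputs, as the example above does with `astype(np.float32)`.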

Storage Size

Ingesting bulk data consumes additional disk space, so take the size of the data into account before ingesting it. Monitor the size of the cached data and manage bulk data folders carefully to avoid running out of space.
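A simple way to monitor usage is to periodically sum the file sizes under a bulk data folder. The sketch below uses only the standard library. The folder layout and the zero-filled placeholder files in the demo are made up; in practice, `directory_size` would be pointed at the local directory that holds a project's bulk data. Both helper names are hypothetical.

```python
import tempfile
from pathlib import Path


def directory_size(root):
    """Return the total size in bytes of all regular files below root."""
    return sum(p.stat().st_size for p in Path(root).rglob("*") if p.is_file())


def human_readable(num_bytes):
    """Format a byte count using binary units (KiB, MiB, ...)."""
    size = float(num_bytes)
    for unit in ("B", "KiB", "MiB", "GiB"):
        if size < 1024:
            return f"{size:.1f} {unit}"
        size /= 1024
    return f"{size:.1f} TiB"


# Demo on a temporary directory standing in for a bulk data folder.
with tempfile.TemporaryDirectory() as tmp:
    sub = Path(tmp) / "samples" / "table" / "image"
    sub.mkdir(parents=True)
    for i in range(4):
        # Zero-filled placeholder files, not real PNG images.
        (sub / f"{i:07d}.png").write_bytes(b"\0" * 2048)
    total = directory_size(tmp)

print(total, human_readable(total))  # 8192 8.0 KiB
```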